Smart Microsystems Laboratory: IMU-Based Navigation with Dead Reckoning

Smart Microsystems Laboratory: Autonomous Sailboat

MSU Formula Racing Team: Electronic Throttle Body Controller

PUMA and NDE Labs: Low-Cost Digital Image Correlation

MSU Mobility Center: MSU Green Mobility App

General Motors: One Third Octave Band Equalizer

MSU 3D Vision Lab: In-Farm Livestock Monitoring (SIMKit v.2)

MSU Unmanned Systems: Aerially Deployable Autonomous Ground Robots

Texas Instruments: Classification with mmWave Sensors

MSU Resource Center for Persons with Disabilities: SCATIR Switch for People with ALS

MSU RCPD/OID/Tesla Inc./Lansing SDA Church: Off-Grid Hospital Power System

MSU Human Augmentation Technologies Lab (HATlab): Platform to Assess Tactile Communication Pathways

National Aeronautics and Space Administration (NASA): Inner Solar System Array for Communications (ISSAC)

MSU Brody Square: Robotics in a Residence Hall Dish Room 4.0

MSU Electromagnetics Research Group: Object Triangulation using Ultrasonic Sensors

Machine Learning Systems: Smart Phone App for Cancer Symptom Management

MSU Spartan Innovations: Mobile Universal Translator

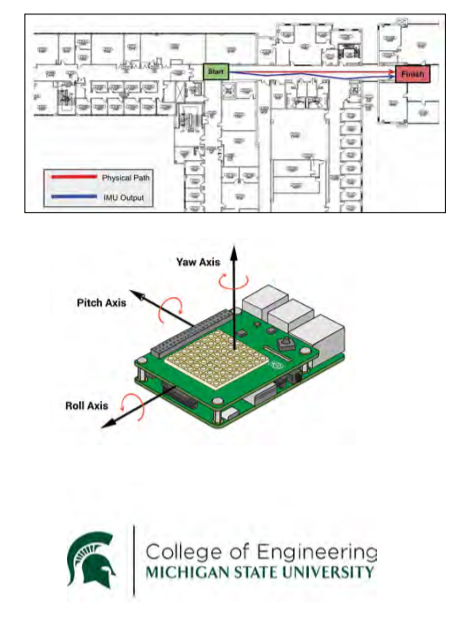

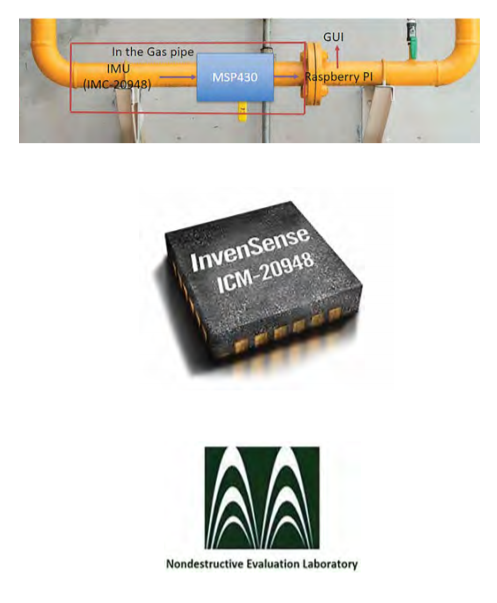

MSU Nondestructive Evaluation Laboratory: Raspberry Pi Controlled IMU Based Positioning System

Smart Microsystems Laboratory: IMU-Based Navigation with Dead Reckoning

Inertial Measurement Units (IMUs) are collections of sensors capable of determining an object’s change in location, orientation, or pose in three-dimensional space. IMUs are a useful navigational tool. For example, IMU sensor data may be used in conjunction with Global Positioning System (GPS) sensors to determine an object’s coordinates more accurately. IMUs can also be used independently to estimate pose in locations where GPS data is not consistently available, such as tunnels or underwater.

Dead reckoning refers to the use of pose estimates (such as those provided by IMUs) when navigating in three-dimensional space, often without authoritative third-party information such as GPS location. Dead reckoning has many potential commercial applications, including autonomous cars, unmanned aerial systems, remotely operated vehicles, and biometric sensor vehicles, such as those being developed at the Smart Microsystems Laboratory.

The overall goal of this project was to evaluate various inexpensive, off-the-shelf IMUs; compare the performance of several denoising/filtering algorithms when applied to IMU data; and determine the potential capabilities of these IMUs and algorithms when applied to simple dead reckoning algorithms.

To meet these objectives, a device was designed that integrates a platform base, an IMU, and a camera into a single system; acquires IMU sensor data and AprilTags ground-truth data; and offloads that data to a base station computer. This base station then applies denoising, filtering, and processing algorithms to accelerometer and gyroscope data from the IMU; estimates pose using the “cleaned” data; and displays the data in a custom three-dimensional data visualization inside a web application.
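The pipeline described above (filter the raw IMU signals, then integrate them into a pose estimate) can be illustrated with a minimal planar sketch. It is not the team's implementation: the trailing moving average stands in for whichever denoising filters were compared, and the function names, the 2D simplification, and the body-frame forward-acceleration input are all assumptions made for illustration.

```python
import math


def moving_average(samples, window=3):
    """Smooth a sequence with a trailing moving average (a simple stand-in filter)."""
    smoothed = []
    for i in range(len(samples)):
        start = max(0, i - window + 1)
        chunk = samples[start:i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


def dead_reckon_2d(forward_accel, yaw_rate, dt, x=0.0, y=0.0, heading=0.0, speed=0.0):
    """Integrate forward acceleration (m/s^2) and yaw rate (rad/s) into a planar track.

    Returns a list of (x, y, heading) tuples, starting with the initial pose.
    """
    track = [(x, y, heading)]
    for accel, rate in zip(forward_accel, yaw_rate):
        heading += rate * dt   # gyroscope: angular rate -> heading
        speed += accel * dt    # accelerometer: acceleration -> speed
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        track.append((x, y, heading))
    return track


# Hypothetical usage: smooth noisy readings, then integrate them.
accel = moving_average([1.0, 1.2, 0.8, 1.0, 1.0], window=3)
gyro = moving_average([0.0, 0.01, -0.01, 0.0, 0.0], window=3)
track = dead_reckon_2d(accel, gyro, dt=0.1)
```

Because every step adds to the previous estimate, any sensor bias or residual noise accumulates over time, which is why the choice of filter matters and why an independent ground truth such as AprilTags is useful for evaluation.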

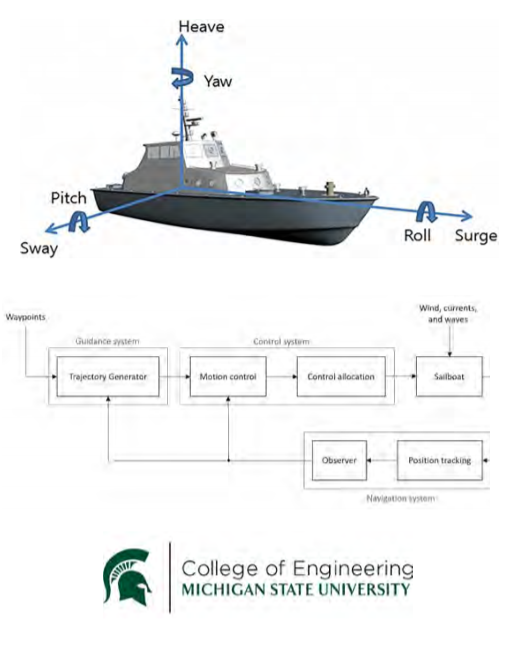

Smart Microsystems Laboratory: Autonomous Sailboat

The Smart Microsystems Laboratory (SML) was established in the Fall of 2004. SML focuses on improving integrated systems by incorporating advanced modeling, control, and design methodologies with novel materials and fabrication processes. SML research plans span the wide-ranging areas of control, dynamics, robotics, mechatronics, and smart materials. SML’s research concentrates on electroactive polymer sensors and actuators, modeling and control of smart materials, soft robotics, bio-inspired underwater robots, and underwater mobile sensing.

One aim of the SML is to create a network of unmanned aquatic surface vehicles, specifically autonomous sailboats, primarily intended for mobile sensing. Applications include, but are not limited to, weather data acquisition, population monitoring, and geological observation. The concept is to create a device that can autonomously observe the surrounding environment while adapting to it, so that the collected data are not disturbed.

Our team was assigned to advance the waypoint tracking control of the sailboat in an indoor setting. Given the desired waypoints and the current wind conditions, we developed a control strategy, implemented in hardware, that steers the sail and the rudder. Our team will also build a graphical user interface for entering waypoints and visualizing waypoint tracking.

Due to the nature of aquatic environments, maintaining a sailboat may disturb the data acquisition process. Beyond the dynamics of the sailboat itself, wind direction and water currents are two of the most vital factors in adapting to the environment.
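As a rough illustration of waypoint tracking with a rudder and a sail, the sketch below steers toward a waypoint with a proportional rudder law and eases the sail out as the wind moves aft. Everything here is an assumption made for illustration, not the team's control strategy: the function names, the gain, the 35-degree rudder limit, the sign convention (positive rudder turns toward increasing heading), and the linear sail rule.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def rudder_command(boat_xy, boat_heading, waypoint_xy, gain=1.0,
                   max_rudder=math.radians(35)):
    """Proportional rudder angle (rad) that turns the bow toward the waypoint."""
    desired = math.atan2(waypoint_xy[1] - boat_xy[1], waypoint_xy[0] - boat_xy[0])
    error = wrap_angle(desired - boat_heading)
    return max(-max_rudder, min(max_rudder, gain * error))


def sail_command(apparent_wind_angle, max_sail=math.radians(90)):
    """Sail angle (rad): 0 when the wind is dead ahead, max_sail when dead astern.

    apparent_wind_angle is measured from the bow; the linear mapping is an
    assumed rule of thumb, not a sail model.
    """
    return max_sail * abs(wrap_angle(apparent_wind_angle)) / math.pi


# Hypothetical usage: boat at the origin heading along +x, waypoint up and to the right.
rudder = rudder_command((0.0, 0.0), 0.0, (1.0, 1.0))
sail = sail_command(math.radians(120))
```

A real controller would also need to handle waypoints that lie upwind, where a sailboat cannot steer directly at the target and must tack.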

MSU Formula Racing Team: Electronic Throttle Body Controller

Formula SAE is a global collegiate design competition that challenges students to design, manufacture, and race small, open-wheel racecars against other universities around the world. The competition is focused around five dynamic events: skid pad, acceleration, autocross, endurance, and efficiency.

The objective of the Electronic Throttle Controller (ETC) is to increase the performance and drivability of Michigan State University’s Formula SAE racecar. Benefits of an ETC system over a traditional cable-driven system include more precise throttle control, programmable throttle curves, and the ability to include control algorithms. The ETC system is made of various sensors, a controller, and an electronic throttle body (ETB). Sensors in the system include accelerator pedal position, throttle blade position, and brake pressure. These sensors are needed to ensure the functionality and safety of the system. The focus of this project lies in the design of the control board.

The control board integrates with the rest of the MSU Formula Racing Team’s vehicle via a Controller Area Network (CAN) communication bus. Using data from the vehicle and sensors, the controller runs control algorithms programmed via Simulink to drive the ETB to the correct position.
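To make the ideas of a programmable throttle curve and a position control loop concrete, here is a small sketch. The team's algorithms are programmed in Simulink; this Python version is only an illustration, and the curve points, gains, output limits, and names (`throttle_target`, `PIController`) are hypothetical.

```python
def throttle_target(pedal_pct, curve):
    """Piecewise-linear throttle curve lookup.

    curve is a list of (pedal %, throttle %) points with strictly increasing
    pedal values; inputs outside the curve are clamped to its end points.
    """
    if pedal_pct <= curve[0][0]:
        return curve[0][1]
    for (p0, t0), (p1, t1) in zip(curve, curve[1:]):
        if pedal_pct <= p1:
            return t0 + (t1 - t0) * (pedal_pct - p0) / (p1 - p0)
    return curve[-1][1]


class PIController:
    """Discrete proportional-integral controller with a clamped output."""

    def __init__(self, kp, ki, out_min=-100.0, out_max=100.0):
        self.kp = kp
        self.ki = ki
        self.out_min = out_min
        self.out_max = out_max
        self.integral = 0.0

    def step(self, target, measured, dt):
        """Return the actuator command for one control period of length dt."""
        error = target - measured
        self.integral += error * dt
        output = self.kp * error + self.ki * self.integral
        return max(self.out_min, min(self.out_max, output))


# Hypothetical usage: a soft initial pedal response, then a steeper ramp.
curve = [(0.0, 0.0), (50.0, 20.0), (100.0, 100.0)]
controller = PIController(kp=2.0, ki=1.0)
target = throttle_target(75.0, curve)  # desired throttle blade position, %
command = controller.step(target, measured=50.0, dt=0.1)
```

A production controller would add the safety checks that the pedal, throttle blade, and brake pressure sensors exist to support; those are omitted here.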

By creating a custom ETC system, rather than buying off-the-shelf systems, the MSU Formula Racing Team has more control over the functionality of the system. The custom design of the ETC controller allows for complete integration with the rest of the systems on the vehicle.

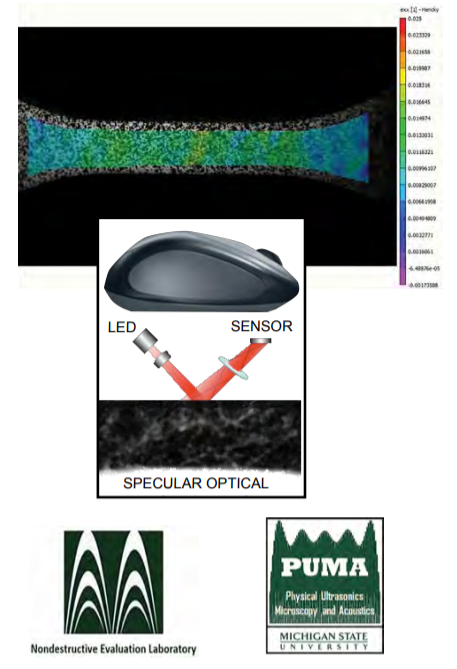

PUMA and NDE Labs: Low-Cost Digital Image Correlation

Digital image correlation (DIC) is a technique used to measure deformation and strain in materials. The technique combines image registration and tracking methods for accurate and efficient measurement of changes in images. Deformation and strain measurement are key components of materials testing, and DIC allows visualization of strain maps in critical components. This allows engineers to predict the behavior of a part made from a material during process operations.

A fundamental concept of DIC is comparing two images of a component before and after deformation. Before the DIC process begins, a speckle pattern (or tracking pattern) is applied to the component and a reference image is taken; a subset of this pattern is then selected for tracking. The center of the subset on the reference image is the location where the displacement will be calculated. Once the material has been deformed from the reference image’s initial position, the subsets from the deformed image and the reference image are matched. The DIC algorithm then calculates the displacement between the two images.
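The subset-matching step can be sketched as a brute-force search: slide the reference subset over a small neighborhood of the deformed image and keep the shift with the smallest sum of squared differences (SSD). This is a simplified illustration with integer-pixel shifts and plain Python lists; the function names and the SSD criterion are assumptions, and practical DIC codes typically add subpixel interpolation and subset deformation.

```python
def ssd(reference, deformed, top, left, dy, dx, size):
    """Sum of squared differences between a reference subset and a shifted subset."""
    total = 0
    for r in range(size):
        for c in range(size):
            diff = reference[top + r][left + c] - deformed[top + dy + r][left + dx + c]
            total += diff * diff
    return total


def track_subset(reference, deformed, top, left, size, search=2):
    """Return the integer (dy, dx) shift that best matches the subset.

    The subset is the size-by-size block of `reference` whose top-left corner
    is (top, left); shifts of up to +/- `search` pixels are tried.
    """
    rows, cols = len(deformed), len(deformed[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            fits = (0 <= top + dy and top + dy + size <= rows
                    and 0 <= left + dx and left + dx + size <= cols)
            if not fits:
                continue  # shifted subset would fall outside the image
            score = ssd(reference, deformed, top, left, dy, dx, size)
            if best is None or score < best[0]:
                best = (score, dy, dx)
    return best[1], best[2]


# Hypothetical usage: a synthetic speckle image moved 1 pixel down and 2 pixels right.
reference = [[(37 * r + 101 * c + 17 * r * c) % 256 for c in range(10)] for r in range(10)]
deformed = [[reference[r - 1][c - 2] if r >= 1 and c >= 2 else 0 for c in range(10)]
            for r in range(10)]
shift = track_subset(reference, deformed, top=3, left=3, size=4)
```

Repeating the search for many subsets across the image gives a displacement field, from which strain maps can be derived.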

The purpose of this project is to develop a low-cost alternative to current DIC modules used for strain testing. The goal is to use the functionality of an optical mouse to efficiently and effectively develop hardware and software that detect and track speckle patterns as the mouse travels along a surface. The mouse and its laser have built-in hardware capable of DIC, since movement tracking is already incorporated in the design. Our project will make use of this technology to develop a small, inexpensive, yet effective DIC module for our customer. The approach involves a microcontroller in conjunction with C++ programming, as well as DirectX software to model the displacement of the speckle pattern.

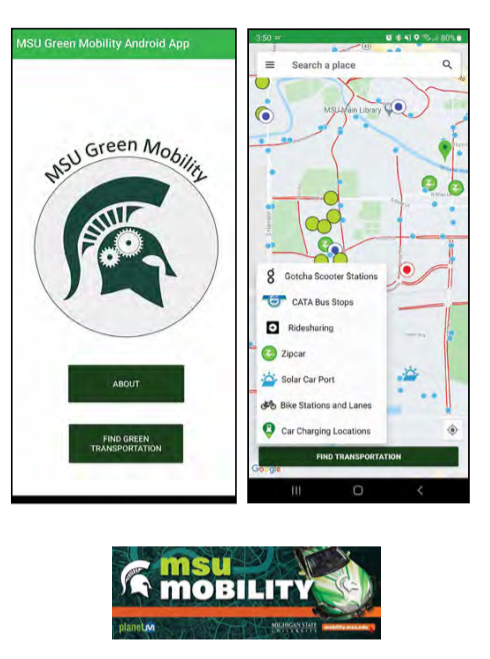

MSU Mobility Center: MSU Green Mobility App

The MSU Mobility Center monitors the flow of people and vehicles across campus, focusing on creating systems of transportation to ensure students and faculty stay safe and move efficiently while on campus. They currently aim to reduce CO2 emissions and vehicle count on campus while increasing student flow especially between class periods.

Our MSU Green Mobility App provides a way for the MSU community to learn about and utilize “green” modes of transportation. A “green” mode is a method of transportation that does not rely on burning fuels or other natural resources. Using such modes helps limit the release of greenhouse gases, which in turn reduces pollution and protects our living environment and ecosystems.

Some of the primary milestones for this project include implementing a routing feature to make the app more practical in day-to-day activities, adding Lyft as a ridesharing option, and polling accurate, real-time information for both the CATA buses and the Amtrak train station.

With the MSU Green Mobility App, the user can easily interact with the local map which provides several options for green transportation on campus. For each option, the user can determine the distance, the “greenest” way, the cost, and how long it will take to reach the destination. Depending on the option that the user selects, all possible routes will be displayed.

The ultimate goal for our MSU Green Mobility App is to make MSU more eco-friendly by providing students and faculty with information about the “green” modes of transportation available on campus and how they can utilize them. If just 5% of people start utilizing these options instead of driving, CO2 emissions would go down, MSU could save money on parking structures, and students choosing to walk would be able to get across campus much more easily.

General Motors: One Third Octave Band Equalizer

With electric vehicles on the horizon, General Motors’ mission is to bring everyone into an all-electric future by 2025, leading the world in EV research and assembly. GM is headquartered in Detroit, Michigan, and is also known for brands like GMC, Buick, Cadillac, and Chevrolet, selling 7.7 million automobiles combined in 2019.

Sound is one of the most important characteristics of vehicle safety for pedestrians, and all hybrid and electric vehicles must adhere to minimum audible sound requirements established by global regulations. While next-generation vehicle sounds are produced with those regulations in mind, equalizers built into digital audio workstations (DAWs) lack the precision needed to amplify and attenuate specific frequency bands to better accommodate pedestrian safety and driver comfort.

The focus of our one-third octave band equalizer tool is to provide an easily integrable plugin for DAWs like Logic Pro that analyzes a waveform audio file format (WAV) file, modifies its frequency response via an equalizer with 1/3 octave precision, and exports the modified audio data as a WAV file without loss of quality. The analyzer displays frequency response on a linear scale to provide the precision needed by GM and to streamline the audio creation process for sound engineers.
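For readers unfamiliar with 1/3 octave precision, the sketch below lists the bands an equalizer of this kind would expose. It assumes the common base-2 definition, with center frequencies at 1000 · 2^(n/3) Hz and band edges a factor of 2^(1/6) on either side; the function names, the 20 Hz to 20 kHz range, and the example gain are illustrative and are not taken from the plugin.

```python
def third_octave_bands(f_low=20.0, f_high=20000.0, f_ref=1000.0):
    """Return (lower, center, upper) frequencies in Hz for one-third octave bands.

    A band is included when any part of it overlaps the range [f_low, f_high].
    """
    half_band = 2 ** (1 / 6)  # ratio from a band's center to either edge
    bands = []
    for n in range(-30, 31):
        center = f_ref * 2 ** (n / 3)
        lower, upper = center / half_band, center * half_band
        if upper > f_low and lower < f_high:
            bands.append((lower, center, upper))
    return bands


def db_to_linear(gain_db):
    """Convert a gain in decibels to a linear amplitude factor."""
    return 10 ** (gain_db / 20)


# Hypothetical usage: list the bands and boost the 1 kHz band by 6 dB.
bands = third_octave_bands()
gains = {center: 1.0 for _, center, _ in bands}
gains[1000.0] = db_to_linear(6.0)
```

With these defaults the function returns 31 bands, and each band's upper edge coincides with the next band's lower edge, so the bands tile the audible range without gaps.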

The equalizer plugin is developed in C++ using JUCE for the front-end interface and back-end functionality, while a separate, standalone program is developed using Python scripts to simulate the user interface and functions developed within the JUCE framework.

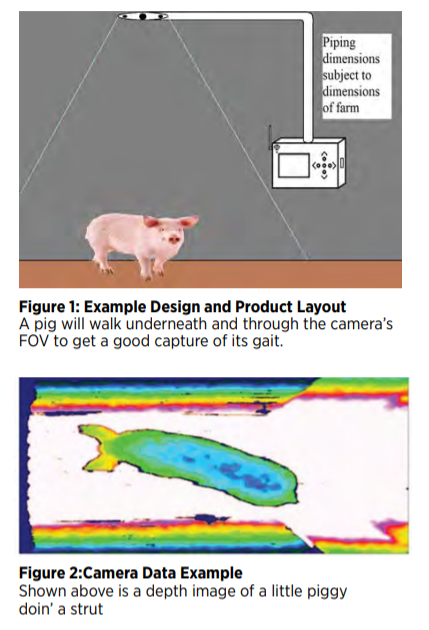

MSU 3D Vision Lab: In-Farm Livestock Monitoring (SIMKit v.2)

3D Vision Lab is based out of Michigan State University and focuses on research involving Automated Vehicles, Smart Agriculture, and Tremor Tracking technologies.

Our team has designed a livestock monitoring system to be used in barns to monitor the health of pigs. The device records livestock gait and body size so that the data can be analyzed by a third-party lab. This device is the second of its kind and offers a higher resolution and framerate than the previous design, as well as the ability to upload data to cloud storage via Wi-Fi.

In addition to adding Wi-Fi capabilities to our device, this newer SIMKit will feature an Azure Kinect RGB-D camera to enable us to get high framerate output footage.

Upon completion, the tool will greatly reduce the amount of time and effort needed to transport this data to be analyzed, as well as offer better data points for farmers, leading to better care of their livestock.

We will create a 3D printed box to hold the screen, computer, buttons and Wi-Fi module in a watertight enclosure.

MSU Unmanned Systems: Aerially Deployable Autonomous Ground Robots

The objective of this project is to build a dropping mechanism that deploys an autonomous ground vehicle. The dropping mechanism will attach to a prebuilt drone provided by the Unmanned Systems team. The system will be used in the 19th Annual Student Unmanned Aerial Systems Competition (SUAS). One of the goals of this competition is to help further the field of autonomous package delivery.

The total payload drop cannot exceed 64 ounces, of which 8 ounces are dedicated to carrying a water bottle. The drop mechanism will be a servo that releases a pin. The ground vehicle will then descend to the ground with a parachute slowing the fall. Once the ground vehicle has landed safely, it will release the holding device for the parachute and then drive to the coordinates provided on competition day.

The main hardware for the ground vehicle will include a body, treaded tires, motors, a Raspberry Pi, a GPS system, suspension systems, a gyroscope sensor, and other basic parts for RC-style operation. The components will communicate mainly through Python and the ROS library. The main risks in the overall mission are the ground vehicle not landing safely and the vehicle not being able to drive to its provided coordinates. To guard against both, the release system, the parachute, and the program were tested rigorously. Weather conditions will also be a factor, so the system was tested in various winds to ensure successful deployment.

Successful development of this system will lead to a deployment from 150 feet in the air and a short autonomous drive to the final coordinates. The system will be showcased in the SUAS competition for Michigan State’s Unmanned Systems team, where it will have the opportunity to place against other competing autonomous systems.

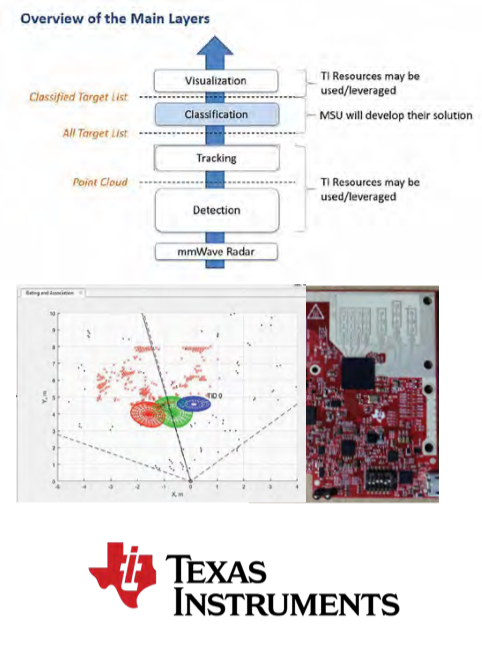

Texas Instruments: Classification with mmWave Sensors

The goal of this project is to improve and expand upon the Texas Instruments mmWave radar tracking technology.

This mmWave radar technology operates by radiating an electromagnetic wave signal (60–64 GHz), which reflects off various objects within reach of the device. This reflected signal contains information about the object from which it was reflected, such as the velocity of the object, the angle relative to the sensor, and the range from the sensor.

mmWave sensors are effective in detecting moving objects in a scene but alone are incapable of determining the type of object detected. Algorithms can be developed to work in coordination with mmWave sensors to successfully classify such objects.

Based on this information, the sensor in coordination with the algorithm can categorize the object as human or as another object in motion. Further development of mmWave technology will allow this to have widespread applications in aerospace radar systems, autonomous driving, ethical surveillance systems where privacy is of concern, and more.

We are expanding upon the work previously completed by TI and previous senior design teams at MSU. This involves improving the Support Vector Machine algorithm to enhance object classification performance and accuracy. Additional data is captured to continue training the algorithm. Object classification is being expanded from a binary system, where moving objects are classified as human or non-human, to a multi-class system, where moving objects are classified as human, pet, or neither. Each classification category requires its own collection of data to train the algorithm. This data allows the algorithm to learn the features and characteristics of like objects and group them into labeled categories.

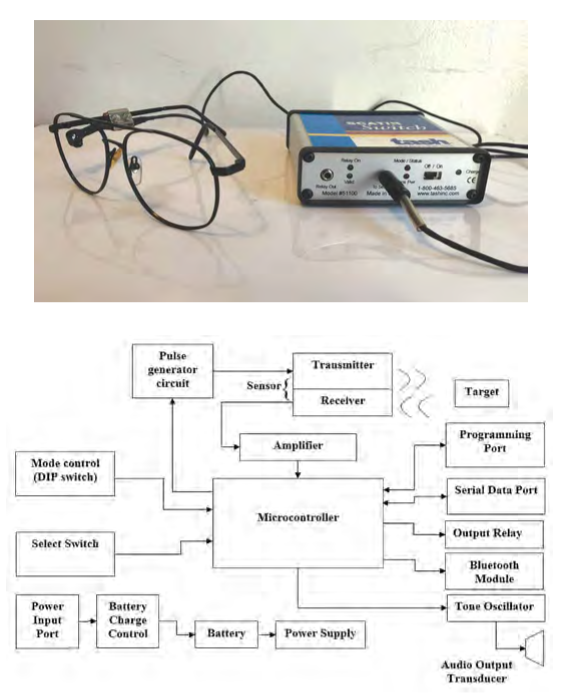

MSU Resource Center for Persons with Disabilities: SCATIR Switch for People with ALS

The MSU SCATIR (Self Calibrating Auditory Tone Infra Red) Switch is an assistive technology that is currently in use particularly by people with ALS. The SCATIR Switch was invented by Stephen R. Blosser and John B. Eulenberg. The SCATIR Switch’s eye sensor detects “blinks” from the user wearing wire glasses. This Switch can then be connected to an output (switch closure or relay) so that the user can perform various tasks.

Our team is creating additional accessibility and compatibility features on the SCATIR Switch. We are adding a wireless Bluetooth output to allow for wireless connection. This will allow the user to connect to other devices such as computers and phones to perform a ‘left click’ function. This will increase the scope of use and accessibility for the SCATIR Switch.

Additionally, we are adding a micro-USB/USB-C charging port to make charging more convenient for users. The device currently uses a wall transformer for charging, so replacing it with a USB charging port will make charging easier for the user.

We are creating additional features on the SCATIR Switch to increase availability and functionality for users.

MSU RCPD/OID/Tesla Inc./Lansing SDA Church: Off-Grid Hospital Power System

In Bombardopolis, Haiti, a hospital and training center is being developed where little to no electricity is available. Our task is to design a power system with specific components such as batteries, inverters, solar panels, wind generators, etc. Lithium batteries are to be used as opposed to lead-acid batteries for a variety of reasons.

We have also been tasked with designing and building a monitoring system that measures the status of the batteries and other potential variables and reports them in an app, allowing users to monitor the hospital power system. We will be using Solar Edge components to design our system.

MSU Human Augmentation Technologies Lab (HATlab): Platform to Assess Tactile Communication Pathways

The Michigan State University Human Augmentation Technologies Lab (HATlab) seeks to develop a new device that bypasses visual and audio sensory channels to enable high-speed, machine-to-human communication via tactile (skin) sensory pathways. Broadly speaking, it is believed that by focusing on autonomous sensory microsystems, HATlab can integrate these technologies into a diverse range of external fields to provide maximum benefit for a targeted user base.

Building on the success of the Fall 2020 semester capstone team’s project, our team focused on developing a new testing platform suitable for increasing tactile message comprehension to 20 discrete inputs. We then assessed the performance humans exhibit in decoding tactile messages while completing game-like tasks to quantify the degree to which these competencies can proliferate in a controlled environment.

As a stepping-stone for emerging technologies, this project delivers an application that uses tactile feedback through game controllers to support the foundational research goals of the HATlab team. To accomplish this, the project included the design of a sequential task game, or STG, for training users to recognize and decode tactile messages into assigned game inputs. Combining game theory with the psychology of retention and learning, the STG environment was designed to maximize player memory retention.

In testing human retention, this project sought to quantify the limitations (if any) to tactile communication pathways for the average individual. By doing so, the data and platforms provided by our team will inform future research to allow for greater expansion into this exploratory field of neural engineering.

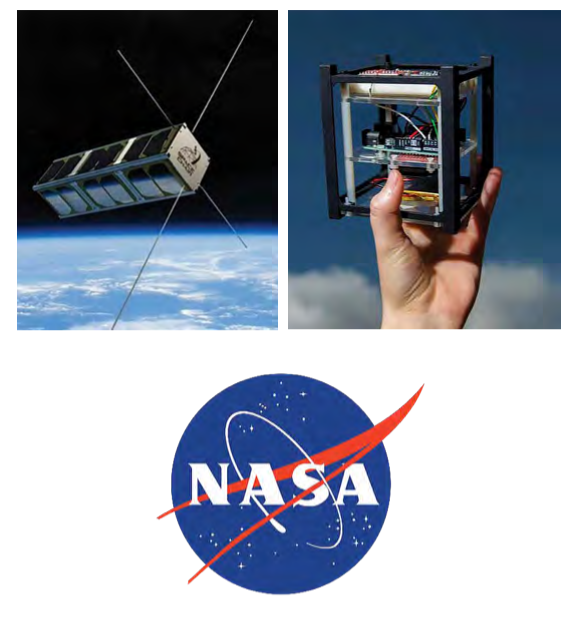

National Aeronautics and Space Administration (NASA): Inner Solar System Array for Communications (ISSAC)

The National Aeronautics and Space Administration, otherwise known as NASA, is no longer the sole American actor in the exploration and execution of missions in space. Commercial partners like SpaceX, Lockheed Martin Space, and Astrobotic are all investing in hardware intended for operation off Earth. These kinds of operations require long distance communications that can rely on existing networks, often competing for limited availability.

In order to create a demo for this project within this semester’s timeframe, we propose creating a Unity simulation of the CubeSat network. The network will be an array of many satellites in an ad-hoc configuration, and we plan on creating a simulation of said network which can be used by future groups working on this project.

The scale simulation will allow users to specify parts and parameters such as antennas, power, and RF modulation and coding scheme, to name a few. It will allow users to see the advantages and disadvantages of using certain parts. With outputs like range, number of CubeSats required to reach a certain point, data rate, and link margin, users will be able to easily find a suitable part for their CubeSat build.

The most important output of our simulation is the link margin between two points of communication. The link margin is the difference between the minimum power received by the receiver and the receiver sensitivity. This difference determines if the current configuration will work at a given distance. NASA requires a minimum link margin of 3 dB in all of their links to meet data rate requirements and improve resistance to dropped packets.
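The link margin calculation described above can be sketched with a simple link budget. The snippet assumes free-space propagation, which is a simplification, and uses the standard free-space path loss formula, 20·log10(4πdf/c); the function names, parameter names, and example values are hypothetical and are not taken from the team's Unity simulation.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def free_space_path_loss_db(distance_m, frequency_hz):
    """Free-space path loss in dB between isotropic antennas."""
    return 20 * math.log10(4 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT)


def link_margin_db(tx_power_dbm, tx_gain_dbi, rx_gain_dbi, distance_m,
                   frequency_hz, rx_sensitivity_dbm, other_losses_db=0.0):
    """Received power minus receiver sensitivity, in dB."""
    received_dbm = (tx_power_dbm + tx_gain_dbi + rx_gain_dbi
                    - free_space_path_loss_db(distance_m, frequency_hz)
                    - other_losses_db)
    return received_dbm - rx_sensitivity_dbm


# Hypothetical link: 30 dBm transmitter, 0 dBi antennas, 1 km range at 2.4 GHz,
# and a receiver sensitivity of -100 dBm.
margin = link_margin_db(30.0, 0.0, 0.0, 1_000.0, 2.4e9, -100.0)
meets_requirement = margin >= 3.0  # the 3 dB minimum stated above
```

Under this model, doubling the distance costs about 6 dB of margin, which is why the number of CubeSats needed to reach a given point depends so strongly on the antenna and power choices.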

MSU Brody Square: Robotics in a Residence Hall Dish Room 4.0

Michigan State University’s Brody Cafeteria provides upwards of 1.6 million meals per year. As a result, the current process of sorting silverware requires several employees at a time in a chaotic and humid environment. With automation, staff members can divert their energy elsewhere creating both a better work environment and customer experience.

Our project, which is a continuation of previous semesters’ projects, is to improve on an existing prototype by increasing sorting efficiency and accuracy.

The current prototype utilizes a conveyor belt in order to transport silverware underneath a camera. The camera then transmits the image to a computer which runs vision-based object recognition in order to determine the type of silverware.

In order to improve the efficiency of the machine, our team has proposed both hardware and software designs. From a hardware perspective, the sorting efficiency can be improved by increasing the speed of the conveyor belt. In order to achieve this, an additional motor circuit would be installed on the side opposite from the initial motor. Another benefit of the motor would be that the force on the belt will be equalized, preventing an issue of the belt slipping and becoming stuck.

From a software perspective, improving sorting accuracy would require changes to the sorting algorithm. Currently, the software detects the length of the silverware and classifies it as either a fork, knife, or spoon. Issues with accuracy arise when silverware is in different orientations or when multiple pieces appear at once. Proposed designs include modifying the parameters of the silverware classifications and implementing image comparison software. Image comparison would utilize a directory of still images of silverware and compare them with footage captured by the camera module.

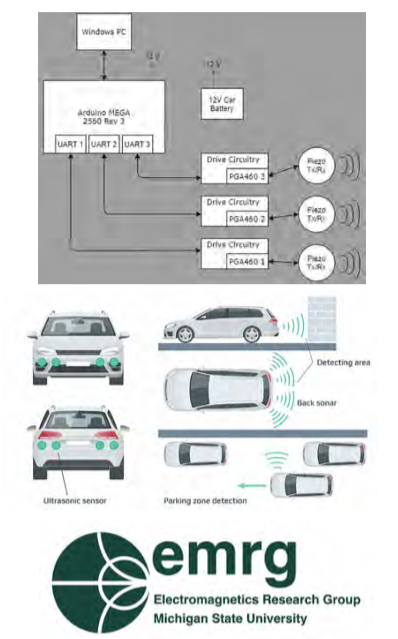

MSU Electromagnetics Research Group: Object Triangulation using Ultrasonic Sensors

The Electromagnetics Research Group (EMRG) at Michigan State University conducts research across a broad range of both fundamental and applied topics, including projects that are focused on developing computational methods for analysis of fields across the electromagnetic spectrum. They also analyze and research sensors and antennas to develop new techniques that help improve electromagnetic field analysis. This project has been sponsored by MSU EMRG in order to further their work in the field of ultrasonic sensing and triangulation.

Accurate ultrasonic sensors for direct 3D position and orientation measurement are needed for a range of applications, such as autonomous vehicles, liquid flow sensors, industrial settings, and robotics. A platform that needs an object’s detailed position to build a virtual map of its environment, such as an automobile or an autonomous mobile robot, requires triangulation in order to move safely and accurately.

The goal of this project is to provide a triangulation solution to track an object’s location using ultrasonic transducer sensors. These transducers are used to triangulate the location of an object within a specific area. The location of the object should then be mapped onto a computer display that accurately maps the object’s position.

This project primarily utilizes piezoelectric transducers that are able to both transmit and receive signals for object tracking. Piezoelectric sensors are devices that produce electricity when exposed to changes in the environment, usually in the form of vibrations. After transmitting a signal, the transducers listen for the vibration of that signal’s response. The vibrations are then converted into electrical signals that are used to map the object’s position onto a display. The use of three piezoelectric sensors for object triangulation improves sensor accuracy in comparison to more common systems designed with only one or two sensors.
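One way three transducers can locate an object is sketched below: each echo's round-trip time gives a distance, and three distances from known sensor positions fix a point in the plane. Strictly speaking this distance-based approach is trilateration; it is offered as an illustration under stated assumptions, not as the project's algorithm. The speed of sound in air (343 m/s at about 20 °C), the 2D geometry, and all names are assumptions.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C (assumed)


def echo_distance(round_trip_s, speed=SPEED_OF_SOUND):
    """Distance to a reflector from the round-trip time of an ultrasonic pulse."""
    return speed * round_trip_s / 2.0


def trilaterate(sensors, distances):
    """Locate a point in 2D from its distances to three known sensor positions.

    Subtracting the circle equations pairwise leaves two linear equations in
    (x, y), which are solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = sensors
    r1, r2, r3 = distances
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("sensors must not be collinear")
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y


# Hypothetical usage: three sensors at known positions (meters) and measured echo times.
sensors = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
echo_times = [2 * d / SPEED_OF_SOUND for d in (2**0.5, 10**0.5, 5**0.5)]
position = trilaterate(sensors, [echo_distance(t) for t in echo_times])
```

With only two sensors the circles generally intersect at two points, so a third sensor is what removes the ambiguity in this model.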

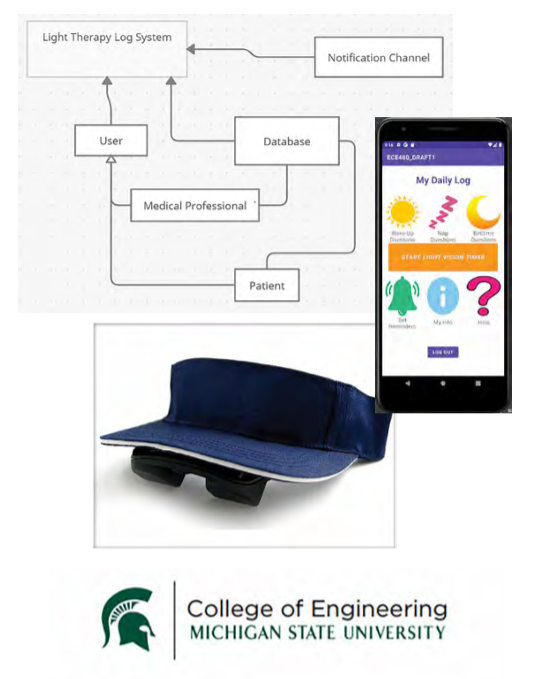

Machine Learning Systems: Smart Phone App for Cancer Symptom Management

Machine Learning Systems (MLS) is a group at Michigan State University that works on topics at the intersection of systems and Artificial Intelligence (AI), with current focus on On-Device AI for mobile, AR, IoT (TinyML), Automated Machine Learning (AutoML), Federated Learning, Systems for Machine Learning, Machine Learning for Systems, and AI for Health. The director of MLS, Dr. Mi Zhang, partnered with our team to create an Android app for cancer symptom management.

Sleep/wake disturbance and fatigue, two of the most debilitating symptoms, commonly occur in cancer, especially during chemotherapy. These two symptoms are known consequences of circadian rhythm disruptions due to cancer. Because light is the most potent external cue coordinating circadian rhythms, it can be used to help relieve sleep/wake disturbances and fatigue resulting from disruption of these rhythms. A personalized bright light therapy will offer cancer patients a non-pharmacological option for managing sleep/wake disturbance and fatigue.

This app contains an automated alert functionality to help monitor and enhance adherence to the prescribed light therapy. Specifically, the smartphone app can be programmed for the time of the day the individual is prescribed to receive the light intervention. A text reminder is sent through the app at the prescribed time each day. Real-time data is provided by a touch-screen timer that records start and end times of each light therapy session.

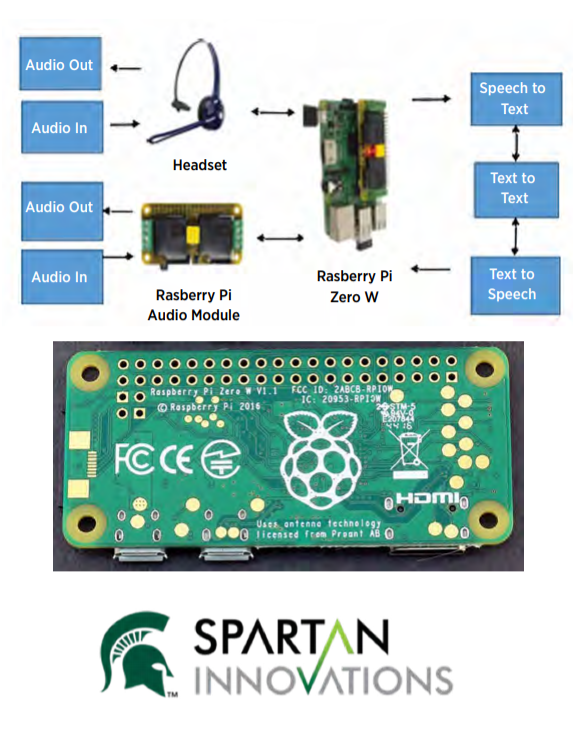

MSU Spartan Innovations: Mobile Universal Translator

Spoken language is one of the defining characteristics of human beings. Humans have used it to communicate for over 60,000 years. Throughout that time many different languages have emerged in different parts of the world. Today there are over 7,000 languages, and if you add up the number of people who speak the 23 most popular languages, you only account for half of the world’s population. This creates a need for translation devices especially in today’s ever-connected world.

The goal of this project is to create a device that allows for quick and easy translation between languages. The device should be able to work both with and without a Wi-Fi connection, depending on the circumstances of the user. The device is meant to be used in both leisure and emergency situations. For example, it should be usable by medical professionals and law enforcement in instances where a human translator cannot be located quickly.

The device will use a Raspberry Pi to hear and translate what is being spoken to the user. The translation will then be played by a Bluetooth earpiece the user is wearing. The user will then talk into the earpiece, and the audio will be translated and played via the Raspberry Pi.

This project is the first of its kind for the College of Engineering. Jacoria Jones, an MSU student in ESHP 170, Business Model Development, had the vision but not the technical background to complete the project. She presented the idea to the university, which brought it to us.

MSU Nondestructive Evaluation Laboratory: Raspberry Pi Controlled IMU Based Positioning System

Natural gas makes up almost a third of energy production in the United States. Natural gas pipes are abundant and used to move gas from production to plant or even into consumers’ homes for heating. With all of these gas pipes laid underground, upkeep and evaluation become imperative for properly functioning natural gas systems. The natural gas industry uses small remote industrial inspection robots that enter the buried pipes and can navigate and look for cross-bores, defects, and wear. However, since these robots are underground, they lose a large amount of their connectivity, similar to a car entering a tunnel and losing GPS signal. This means that they cannot count on accurate GPS readings.

Our team’s solution to this problem will be to design a low-cost, low-power positioning system using an Inertial Measurement Unit (IMU), a microcontroller, and a Raspberry Pi 4. This system will allow accurate location estimates to be displayed to the user based on filtered and manipulated IMU sensor data. Using this system, the industrial inspection robot will be able to navigate the gas pipes with precise location information without the use of GPS.

The IMU that our team will be using has a 3-axis accelerometer, 3-axis gyroscope, and 3-axis magnetometer that will allow for 9-axis measurement sensor data that can be combined for maximum location accuracy. This raw data will be processed using an algorithm developed by our team to filter out noise, integrate the various sensor data, and generate an accurate location estimate that will be displayed using a graphical user interface through the Raspberry Pi. The algorithm will be based on a Kalman filter with additional improvements made from modern research conducted in the area.
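To show the predict-and-update cycle at the heart of a Kalman filter, here is a minimal scalar sketch. The team's algorithm is not described in detail, so this is only the textbook one-dimensional form for a quantity assumed to be roughly constant; the function name, the noise variances, and the sample readings are illustrative assumptions.

```python
def kalman_1d(measurements, process_var, measurement_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a state modeled as approximately constant.

    x is the state estimate and p its variance; returns the estimate after
    each measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        p = p + process_var            # predict: the state is unchanged, uncertainty grows
        k = p / (p + measurement_var)  # Kalman gain: how much to trust the measurement
        x = x + k * (z - x)            # update the estimate toward the measurement
        p = (1 - k) * p                # the update shrinks the uncertainty
        estimates.append(x)
    return estimates


# Hypothetical usage: smooth noisy readings of a quantity near 5.0.
readings = [5.2, 4.7, 5.1, 4.9, 5.3, 4.8]
smoothed = kalman_1d(readings, process_var=1e-4, measurement_var=0.05, x0=readings[0])
```

A positioning filter for a 9-axis IMU would track a multi-dimensional state (for example position, velocity, and orientation) with matrix-valued gains, but each step follows the same predict-then-correct pattern.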