Smart Microsystems Laboratory: IMU-Based Navigation with Dead Reckoning

MSU Office of the VP for Auxiliary Enterprises: Robotics in a Residence Hall Dish Room 3.0

MSU Resource Center for Persons with Disabilities: Roadside Electric Scooter Detection and Alert System

Consumers Energy: Simulation to Optimize Power Grid Performance

MSU Department of Electrical & Computer Engineering: Human-Aerial-Swarm Interaction System

MSU Human Augmentation Technologies Lab (HATlab): Platform to Assess Tactile Communication Pathways

MSU Mobility Center: MSU Green Mobility App

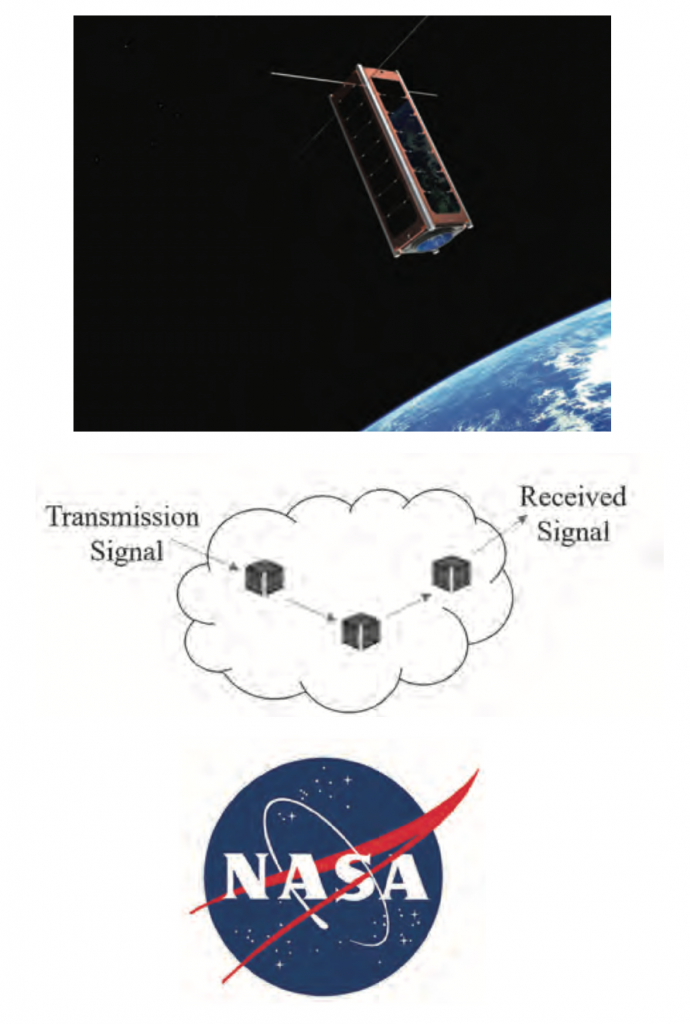

NASA: Solar System Communication Network

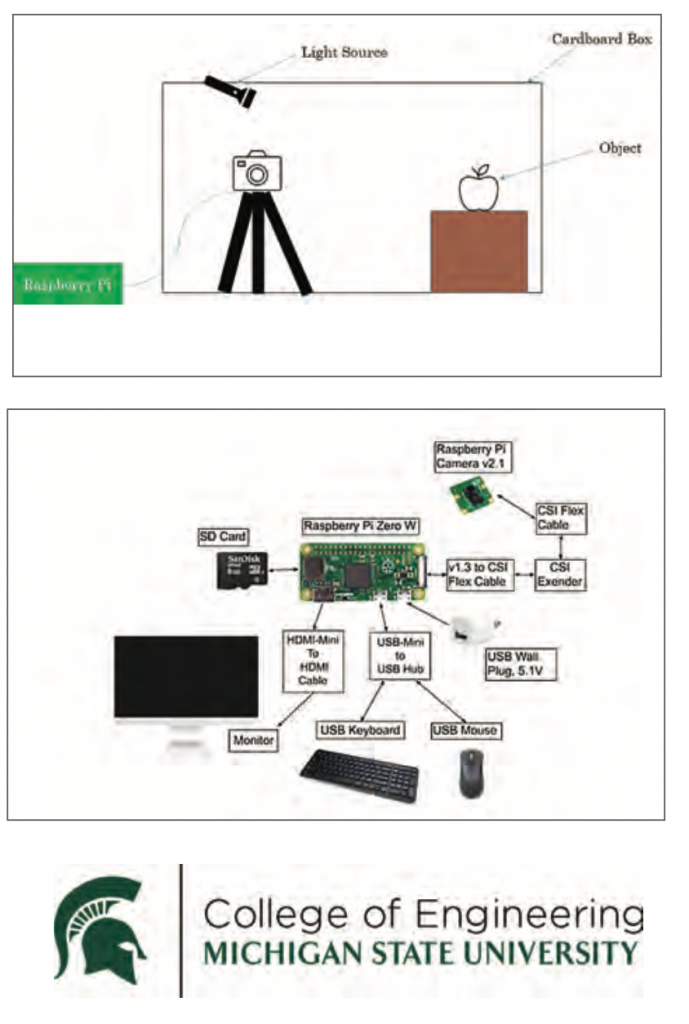

MSU Department of Electrical & Computer Engineering: Diffuser Camera

Michigan State University Solar Racing Team: Vehicle Race Data Telemetry System

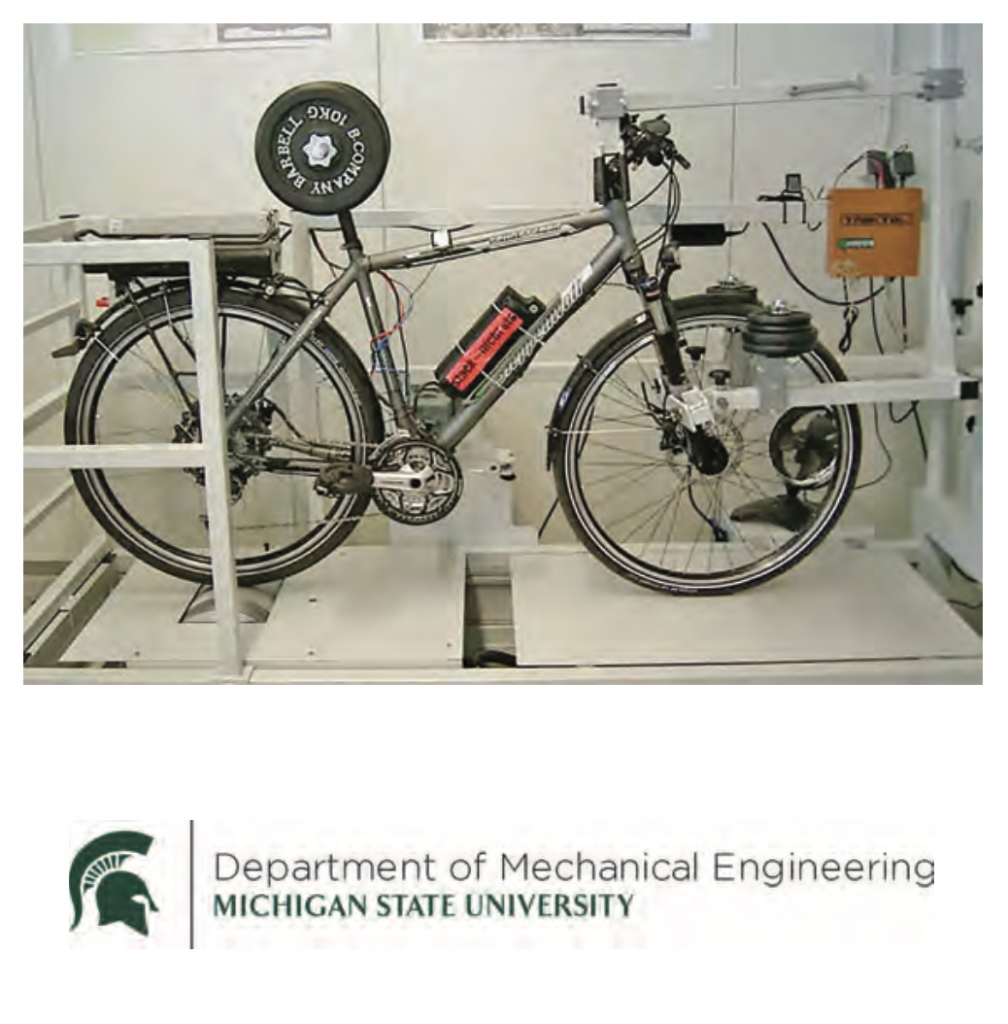

MSU Department of Mechanical Engineering: E-Bike Dynamometer

Texas Instruments: Classification with Millimeter-Wave Sensors

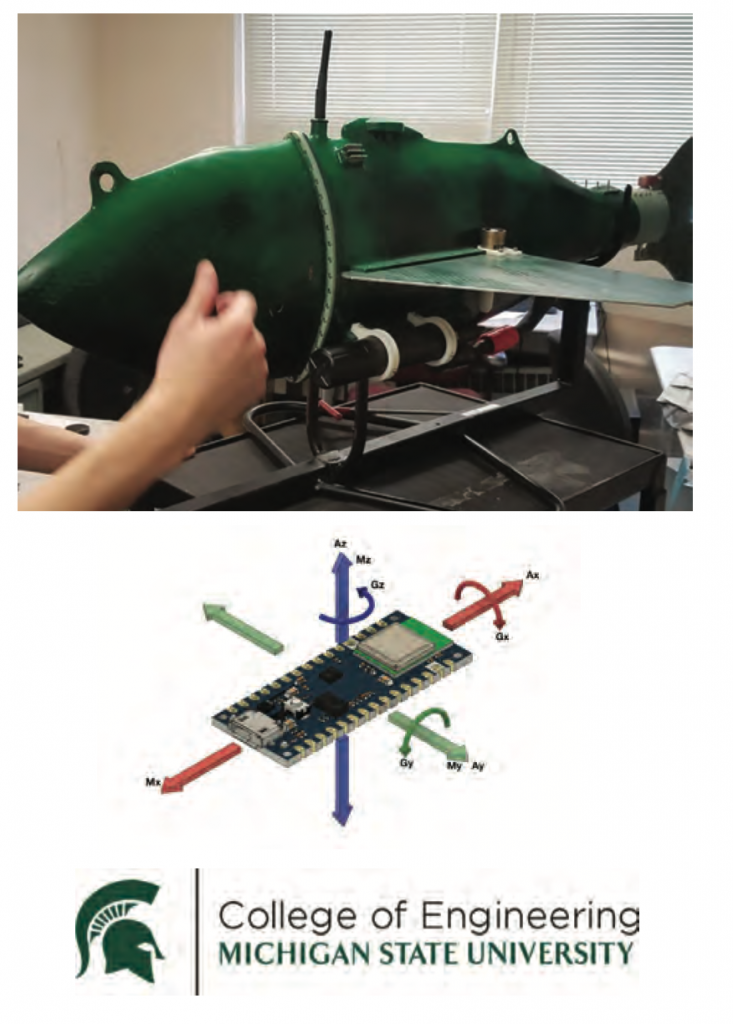

Smart Microsystems Laboratory: IMU-Based Navigation with Dead Reckoning

Established in Fall 2004, the Smart Microsystems Laboratory (SML) has a mission to enable smarter, smaller, integrated systems by merging advanced modeling, control and design methodologies with novel materials and fabrication processes. Its research spans the general areas of control, dynamics, robotics, mechatronics, and smart materials. Research in SML is focused on electroactive polymer sensors and actuators, modeling and control of smart materials, soft robotics, bio-inspired underwater robots, and underwater mobile sensing.

The rapid development of global networks makes today’s world seem small. However, there are still many situations in which a signal is lost because a strong barrier sits between the source and the receiver, causing an object’s position to be measured inaccurately. One solution is to add a hardware component that predicts the position based on the object’s movements.

Our group was tasked with creating an Inertial Measurement Unit-based dead reckoning system. Inertial Measurement Units (IMUs) are electronic devices that measure an object’s specific force, angular rate and orientation using a combination of sensors such as gyroscopes and accelerometers. When the GPS signal is lost and an absolute position cannot be obtained, an estimated location can be determined using an IMU and a technique known as dead reckoning. Dead reckoning requires integrating acceleration twice to obtain position, and as a result of this mathematical step even the slightest measurement error is amplified over time. Over long periods, dead reckoning therefore cannot be relied upon and will produce inaccurate location estimates. Inexpensive IMUs are inadequate unless the data they produce is filtered first. Common techniques for correcting this data include extended Kalman filtering and complementary filtering.
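The effect of double integration on a small sensor error can be sketched in a few lines of Python. This is a minimal one-dimensional illustration, not the team's implementation: the bias value, sample rate, and function names are assumptions chosen for the example, and the complementary filter shown is the generic textbook form for a single angle.

```python
def dead_reckon(accels, dt, v0=0.0, x0=0.0):
    """Integrate 1-D acceleration samples twice (Euler steps) to estimate position."""
    v, x = v0, x0
    positions = []
    for a in accels:
        v += a * dt  # first integration: acceleration -> velocity
        x += v * dt  # second integration: velocity -> position
        positions.append(x)
    return positions


def complementary_filter(angle, gyro_rate, accel_angle, dt, alpha=0.98):
    """Blend the integrated gyro rate (smooth, but drifts) with the
    accelerometer-derived angle (noisy, but drift-free)."""
    return alpha * (angle + gyro_rate * dt) + (1.0 - alpha) * accel_angle


# A stationary sensor whose accelerometer reads a constant bias.
BIAS = 0.01  # m/s^2, hypothetical value
DT = 0.01    # s, hypothetical 100 Hz sample rate
drift_10s = dead_reckon([BIAS] * 1000, DT)[-1]
drift_100s = dead_reckon([BIAS] * 10000, DT)[-1]
print(f"position error after 10 s:  {drift_10s:.2f} m")
print(f"position error after 100 s: {drift_100s:.2f} m")
```

With these assumed numbers the position error is roughly 0.5 m after 10 seconds and roughly 50 m after 100 seconds: ten times the duration gives about one hundred times the error, which is why unfiltered dead reckoning degrades quickly.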

The system is being designed for use in a robotic fish that is owned by the Smart Microsystems Laboratory.

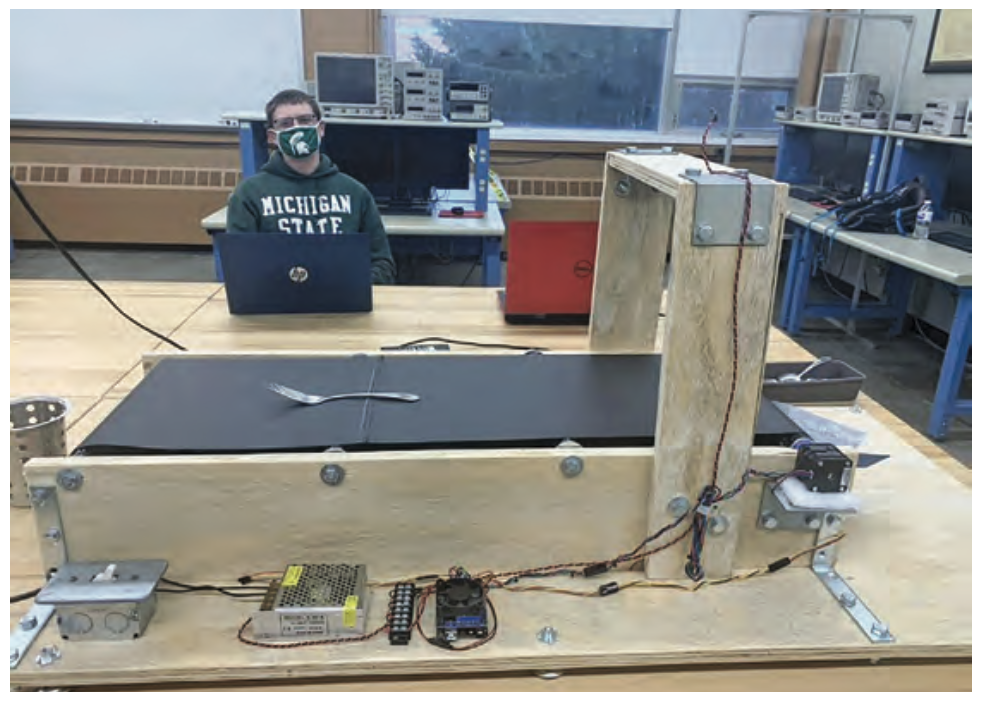

MSU Office of the VP for Auxiliary Enterprises: Robotics in a Residence Hall Dish Room 3.0

Michigan State University’s Brody Cafeteria provides roughly 1.6 million meals per year.

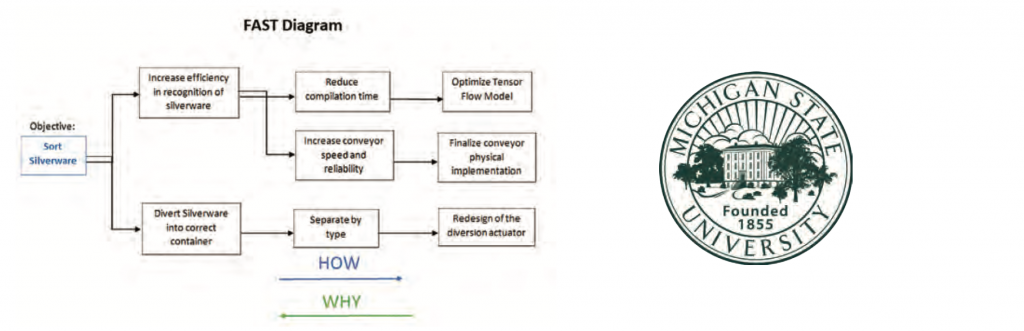

The current process of sorting silverware can occupy several employees at a time in a wet and humid environment. With Brody Cafeteria being short-staffed, this task can cause mayhem in the work environment. Using automation, staff members can divert their energy elsewhere, thus creating a better work environment.

Our project, which is a continuation of a Spring 2020 project, is to improve an existing robot that will help reduce human error when washing and sorting the silverware.

There are a few technical problems that still need to be addressed: improving the efficiency and accuracy of the sorting process by developing automated object recognition software, and redesigning the robotic system to remedy the conveyor belt slipping issue.

MSU Resource Center for Persons with Disabilities: Roadside Electric Scooter Detection and Alert System

The introduction of electric scooters on Michigan State’s campus is a huge convenience for many students. However, these scooters have created a real danger to visually impaired people on campus. While trained assistance animals may be able to detect and avoid these new walkway obstacles, people who use canes may not know they are there until it is too late.

When presented with this problem by the project sponsor (partnered with the scooter company Gotcha), our team began working on a solution. We recognize the usefulness of scooters on campus, which solve the problem of affordable and fast individual transport, but we were certain we could find a solution that did not create a danger to the visually impaired. After all, a true solution is one that does not create other problems.

The solution is to use a combination of GPS and Bluetooth technology. We have implemented our own hardware for research and development purposes. This way, Gotcha would not be open to any additional security risk during the development process, and we would be able to create software to match the original design concept. To accomplish this, a device consisting of a Bluetooth receiver and a speaker needed to be constructed. Our application was then able to communicate with this device by sending a Bluetooth signal indicating that the scooter should emit a chirping noise.

By creating an easily heard noise when approached by a visually impaired person, the scooter is easily detected and avoided. This greatly reduces the possibility of collision and injury.
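One way the GPS side of such a system could decide when to request a chirp is a simple distance check between the user and each scooter. The sketch below is an assumption for illustration only: the 15 m trigger radius, the function names, and the use of the haversine formula are not taken from the project, and the Bluetooth signaling itself is left out.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2.0 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def scooters_to_chirp(user_pos, scooters, radius_m=15.0):
    """Return the ids of scooters within radius_m of the user.

    user_pos is (lat, lon); scooters maps a scooter id to (lat, lon).
    radius_m is a hypothetical trigger distance.
    """
    return [
        scooter_id
        for scooter_id, (lat, lon) in scooters.items()
        if haversine_m(user_pos[0], user_pos[1], lat, lon) <= radius_m
    ]
```

For example, `scooters_to_chirp((42.7000, -84.4800), {"a": (42.7001, -84.4800), "b": (42.7100, -84.4800)})` selects only scooter `"a"`, which is about 11 m away, while `"b"` is more than a kilometre away.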

Consumers Energy: Simulation to Optimize Power Grid Performance

Consumers Energy (CE) is preparing for an increase in Distributed Energy Resources (DER) penetration on its Low Voltage Distribution system. With the increase in distributed resources, such as Solar and Battery Storage, CE is planning new methods of management and control for two-way power flows.

Our project is to assist CE in their pursuit of increasing their DER penetration on the Low Voltage Distribution system by developing and executing a series of power grid simulations utilizing different DER placement designs, while staying within different constraints in order to optimize the power grid’s performance over a set of different criteria.

This set of criteria includes, but is not limited to, voltage, load, and frequency management, contingency analysis, islanding scenarios, and managing external constraints such as cost.

The simulations run during this project are modeled off the real-world design of a solar garden in Kalamazoo and are based on the work done by a Spring 2020 design team.

In addition to developing and running optimization simulations, the project also includes developing a genetic algorithm to aid in finding the optimal parameters for Volt-VAr curves for use in Voltage Management optimization scenarios.
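The idea of tuning Volt-VAr curve parameters with a genetic algorithm can be sketched as follows. This is a toy example under stated assumptions, not the project's simulation: the two-parameter curve (`deadband`, `slope_width`), the parameter bounds, and especially the `fitness` function, which replaces a real power-flow study with a single linear voltage sensitivity, are all hypothetical stand-ins.

```python
import random


def volt_var(v, points):
    """Piecewise-linear Volt-VAr curve; points is a list of (voltage_pu, q_pu) sorted by voltage."""
    if v <= points[0][0]:
        return points[0][1]
    if v >= points[-1][0]:
        return points[-1][1]
    for (v0, q0), (v1, q1) in zip(points, points[1:]):
        if v0 <= v <= v1:
            return q0 + (q1 - q0) * (v - v0) / (v1 - v0)


def make_curve(deadband, slope_width, q_max=1.0):
    """Symmetric four-point curve around 1.0 pu (hypothetical parameterisation)."""
    return [
        (1.0 - deadband - slope_width, q_max),   # low voltage: inject reactive power
        (1.0 - deadband, 0.0),
        (1.0 + deadband, 0.0),
        (1.0 + deadband + slope_width, -q_max),  # high voltage: absorb reactive power
    ]


def fitness(params, raw_voltages, sensitivity=0.1, effort_weight=0.01):
    """Toy cost: squared voltage deviation after correction plus a reactive-effort penalty."""
    curve = make_curve(*params)
    cost = 0.0
    for v in raw_voltages:
        q = volt_var(v, curve)
        cost += (v + sensitivity * q - 1.0) ** 2 + effort_weight * q ** 2
    return cost


BOUNDS = [(0.0, 0.05), (0.01, 0.10)]  # (deadband, slope_width) limits, hypothetical


def genetic_search(raw_voltages, pop_size=20, generations=30, seed=0):
    rng = random.Random(seed)
    pop = [tuple(rng.uniform(lo, hi) for lo, hi in BOUNDS) for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=lambda p: fitness(p, raw_voltages))
        parents = pop[: pop_size // 2]  # selection: keep the better half unchanged
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Uniform crossover followed by Gaussian mutation, clamped to the bounds.
            child = tuple(
                max(lo, min(hi, rng.choice((ga, gb)) + rng.gauss(0.0, 0.1 * (hi - lo))))
                for ga, gb, (lo, hi) in zip(a, b, BOUNDS)
            )
            children.append(child)
        pop = parents + children
    return min(pop, key=lambda p: fitness(p, raw_voltages))
```

Because the better half of each generation is carried over unchanged, the best cost found never gets worse from one generation to the next. In a real study, `fitness` would instead call the grid simulation and score the result against the chosen criteria.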

MSU Department of Electrical & Computer Engineering: Human-Aerial-Swarm Interaction System

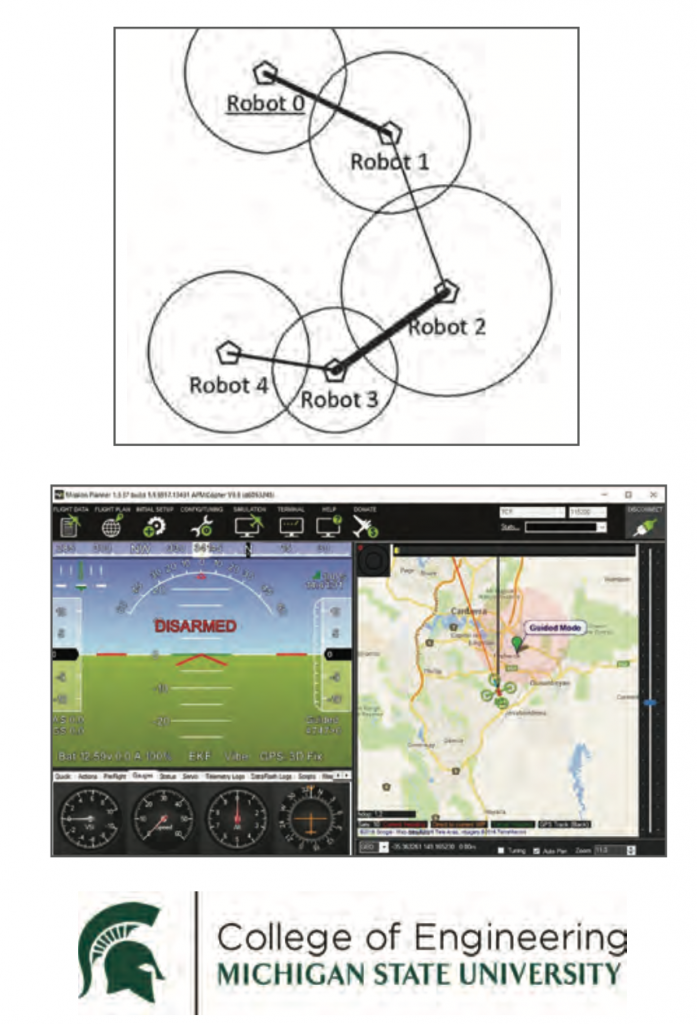

The main objective of this project is to design and control a swarm of aerial robots in a moving formation with the capability of performing different maneuvers. The swarm can be simulated in any state-of-the-art software. The team has chosen to use Mission Planner both for controlling the drones and for simulation. One of the simulated drones will be assigned as the leader, while the rest of the drones in the swarm will be designated as followers. The flight pattern of the swarm will be controlled by a human pilot operating a joystick/controller. This controller will then communicate with a computer and the simulated swarm through either Bluetooth or Wi-Fi. These objectives integrate well with Mission Planner, as the software has a built-in swarm function, as well as controller support and Bluetooth compatibility. Many of the calculations and signals sent to the drones will also be handled by Python scripts, which can be uploaded to Mission Planner and run in parallel with the simulation.

In addition to the simulation aspect of the project, our team has also decided to construct two physical drones. This was decided in order to achieve a physical realization of the leader-follower protocol that will also be implemented in simulation. These physical drones will still be operated with user input through Mission Planner, Python scripts, and a controller.
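The geometry behind a leader-follower formation can be sketched independently of any flight software. The example below is a minimal two-dimensional illustration under assumed conventions (heading measured counter-clockwise from the +x axis, offsets given as forward/right in the leader frame); the function names, gain, and speed limit are hypothetical, and no Mission Planner calls are shown.

```python
import math


def follower_target(leader_xy, leader_heading, offset):
    """Return the follower's target position in the world frame.

    leader_heading is in radians, counter-clockwise from the +x axis.
    offset is (forward, right) in metres, expressed in the leader's frame.
    """
    fwd, right = offset
    cos_h, sin_h = math.cos(leader_heading), math.sin(leader_heading)
    x = leader_xy[0] + fwd * cos_h + right * sin_h
    y = leader_xy[1] + fwd * sin_h - right * cos_h
    return (x, y)


def velocity_command(follower_xy, target_xy, gain=0.8, max_speed=5.0):
    """Proportional velocity command toward the target, limited to max_speed (m/s)."""
    vx = gain * (target_xy[0] - follower_xy[0])
    vy = gain * (target_xy[1] - follower_xy[1])
    speed = math.hypot(vx, vy)
    if speed > max_speed:
        scale = max_speed / speed
        vx, vy = vx * scale, vy * scale
    return (vx, vy)
```

A follower assigned the offset `(-5, 3)` stays 5 m behind and 3 m to the right of the leader no matter which way the leader turns, so changing the formation amounts to changing each follower's offset.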

Upon the completion of this project, we plan to showcase the ability of the software to take user input in terms of desired formation and the actual rendering of aerial team motion, as well as the effectiveness of the joystick interface, which should be smooth with little to no delay in action execution. We also plan to showcase both the simulation aspect with multiple formations of drone swarms, as well as the physical realization with one leader drone and one follower drone.

MSU Human Augmentation Technologies Lab (HATlab): Platform to Assess Tactile Communication Pathways

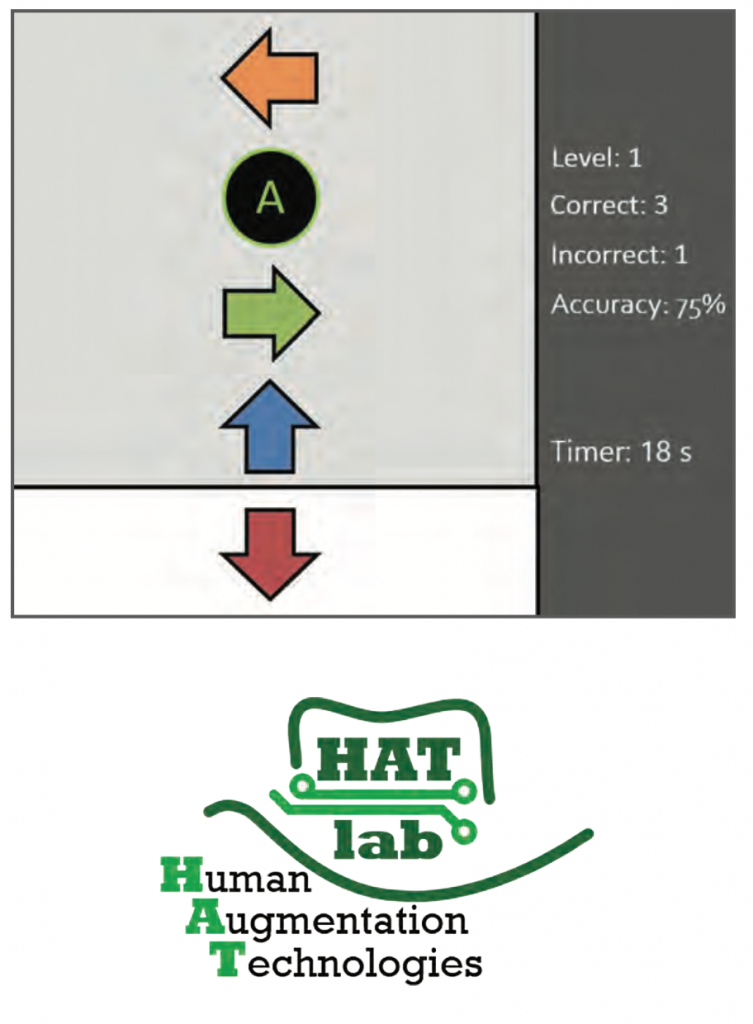

The Human Augmentation Technologies Laboratory, or HATlab, at Michigan State University seeks to develop integrated circuits and microsystem approaches that bridge nano- and micro-sensor technologies and macroscale biomedical and environmental applications. By focusing on autonomous sensory microsystems, HATlab aims to design devices which assist with human health and safety.

HATlab is currently researching a new device which bypasses visual and audio mediums, relying solely on bioelectrical impulses to stimulate tactile sensor pathways. This device could be used in a variety of situations, including amputee prosthetics or arthritis and nerve damage treatments.

As a stepping-stone for their new device, HATlab designed a project to create an application which utilizes tactile feedback through controllers in lieu of bioelectrical impulses. Additionally, the project includes the design of a sequential task game, or STG, for testing the application created. The application will include a method of transmitting a signal to two remote locations to test interpretation of tactile feedback.

The solution includes two applications, an “observer” and an “actor.” The observer application consists of a sequenced input game as well as the relevant testing information, an example of which is displayed to the right. The actor application consists of relevant testing information, as well as visuals of signals for each of the potential buttons available at a given level. The two applications are connected by a cloud service, integrated into the game. This enables the transmission of the button pressed by the observer, which then vibrates the controller of the actor. The actor then interprets the vibration signal and presses the corresponding button. This solution allows the STG to be quickly adapted to read bioelectrical impulses and be tested with the new device HATlab is creating.

MSU Mobility Center: MSU Green Mobility App

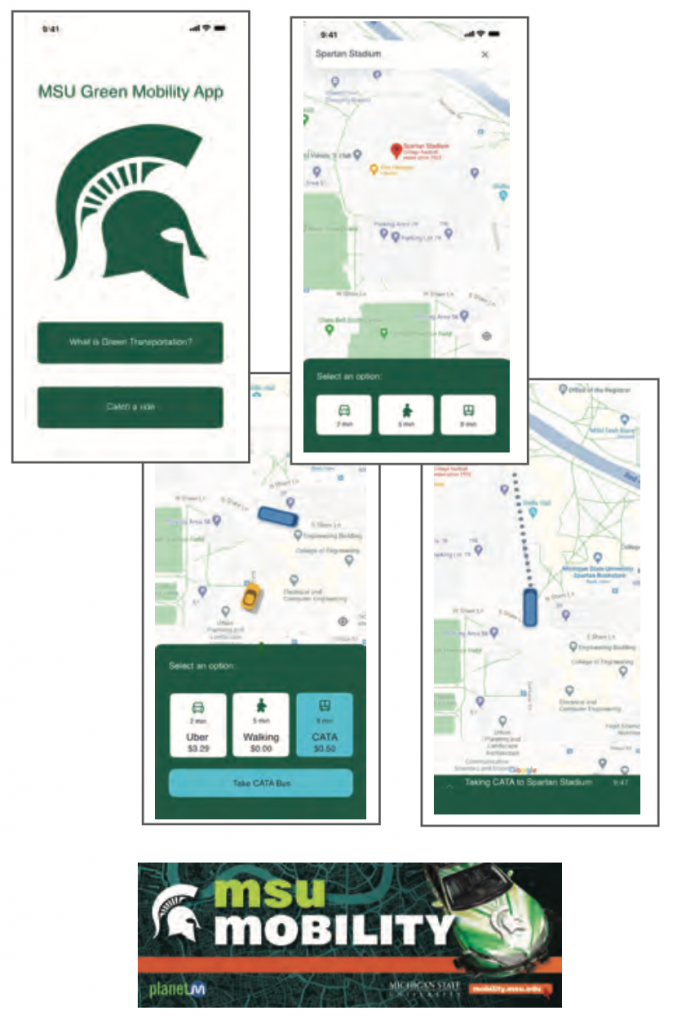

MSU Mobility is a group on campus whose goal is to become a primary source for research and development that revolves around the concept of human-centric multimodal mobility. MSU Mobility focuses on creating systems of communication and transportation that provide knowledge and safety to all students on campus.

MSU Mobility has been researching sociomobility and the use of autonomous vehicles to connect to a larger part of the transportation system, and to try and become a greener community. With partners like MSU Bikes, MSU Mobility is focusing on becoming more eco-friendly with all transportation on campus.

Our application, MSU Green Mobility App, puts together everything MSU Mobility is about, along with providing more “Green” modes of transportation. Green transportation is any form of transport that does not use or rely on the burning of natural resources. Green transportation helps preserve our environment and ecosystems.

The MSU Green Mobility App will provide users with an easy-to-use interactive map providing green modes of transportation around campus. By connecting to already existing apps and companies like Gotcha and the CATA bus service, users will be able to find the “greenest” mode of transportation available. The user will be able to see how far their destination is, how long it will take to get there and how “green” their mode of transportation is.

Whether the user decides to walk, bike, take an e-scooter or take the bus, all options will be available to view.

The goal of the MSU Green Mobility App is to provide users with a simple yet effective way to navigate campus in the most environmentally friendly way possible.

NASA: Solar System Communication Network

The National Aeronautics and Space Administration (NASA) is an independent agency of the United States Federal Government that was established in 1958. NASA is responsible for the civilian space program, along with space and aeronautics research. The civilian rocketry and spacecraft propulsion research for NASA is conducted at the Marshall Space Flight Center (MSFC).

With expanding space research and technology, it has become essential to create a network of nanosatellites for communicating between satellites and Earth. Partnering with the MSFC, our team was tasked with designing a communication relay 6U CubeSat that is able to receive and transmit information at a distance of 1000 km. The design of the 6U CubeSat was based on the limiting size and weight constraints placed upon the satellite while meeting the technical requirements.
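The 1000 km range requirement largely sets the link budget, and the dominant term is free-space path loss. The sketch below uses the standard formula FSPL = 20 log10(4πdf/c); the carrier frequency, transmit power, and antenna gains in the usage line are assumptions for illustration, since the text does not state which band or hardware the design uses.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def fspl_db(distance_m, freq_hz):
    """Free-space path loss in dB between isotropic antennas."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * freq_hz / C)


def received_power_dbm(tx_power_dbm, tx_gain_dbi, rx_gain_dbi, distance_m, freq_hz):
    """Received power, ignoring pointing, polarisation, and atmospheric losses."""
    return tx_power_dbm + tx_gain_dbi + rx_gain_dbi - fspl_db(distance_m, freq_hz)


def link_margin_db(rx_power_dbm, rx_sensitivity_dbm):
    """Positive margin means the signal is above the receiver's sensitivity."""
    return rx_power_dbm - rx_sensitivity_dbm


# Hypothetical example: 1 W (30 dBm) transmitter, 0 dBi antennas, 437 MHz carrier.
p_rx = received_power_dbm(30.0, 0.0, 0.0, 1_000_000.0, 437e6)
print(f"path loss: {fspl_db(1_000_000.0, 437e6):.1f} dB, received power: {p_rx:.1f} dBm")
```

Each doubling of distance adds about 6 dB of path loss, which is why range, transmit power, and antenna selection have to be traded off against the power that the solar cells can supply.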

The blueprint our group created for the design of the satellite targeted three key technical areas: power, communication, and computation. The trade study conducted by our team highlights the optimal conditions and hardware to provide a communication network of 6U CubeSats that transmits and receives data, such as telemetry and images.

Components of the communication system for the CubeSat include utilizing proper signal processing techniques and selecting an antenna system that meets signal frequency requirements. The hardware components of the 6U CubeSat, including the flight computer and communication system circuitry, end up creating power requirements that will be met by the satellite’s solar cells. Our overall design of the 6U CubeSat harmoniously incorporates all necessary components, while meeting the challenging conditions presented by outer space and complying with standard regulations.

MSU Department of Electrical & Computer Engineering: Diffuser Camera

Nearly all modern-day camera sensors are paired with a lens, and light is concentrated through the lens onto the sensor uniformly. However, these lenses are expensive, large, and heavy. Recent research has shown diffusers are able to be used in place of a lens by applying image processing techniques. This allows diffuser cameras to be made cheaper, smaller, and lighter than their lensed counterparts. Our sponsor Ryan Ashbaugh, a graduate student at Michigan State University, was intrigued by these findings and designed a project to further develop this new imaging system.

Although diffuser cameras are not very practical for commercial photography, they have a variety of other uses and functions that conventional lensed cameras cannot perform. For example, since the diffuser camera uses a rolling shutter to capture one line of the image at a time, we can essentially recreate a short video from a 2D image. The camera is also very good at recreating a 3D model from a 2D image. This is due to the fact that our camera does not use a lens. Lensed cameras have one focus plane, meaning things that are out of focus are blurred, whereas our camera does not have this blurring effect. This allows us to get depth information from the whole image. So, by using compressed sensing to make some assumptions about the photo, we can reconstruct a 3D image.

This allows the technology to be very useful in the field of microscopy, more specifically in neural activity tracking. Currently researchers use giant cumbersome microscopes to do neural tracking, but the diffuser camera’s size will allow them to implant the device directly onto their subject’s brain for testing, making the process of studying neural activity more efficient and easier.

For our project, we plan to expand upon the current research and knowledge of diffuser cameras to create a device that has improved resolution. To accomplish this, we are going to try using different types of diffusers as well as more advanced algorithms.

Michigan State University Solar Racing Team: Vehicle Race Data Telemetry System

A real-time telemetry system is vital to all automotive races. With a real-time telemetry system implemented, pit lane crews can monitor vehicle data such as battery temperature and voltage on the go with only a laptop in hand. In solar car racing, every milliwatt of energy is crucial and can be the difference between a win and a loss. Designing a telemetry system that can continuously deliver data with a robust connection and wide bandwidth from the racecar to the pit lane is challenging with limited budgets and time.

To strike a balance between design time, budget, and energy consumption, our team decided to implement the race data telemetry system with a LoRa module, which uses a low-power, wide-area network communication protocol. The LoRa module has several merits, including low power consumption, a stable connection between receiving and transmitting nodes, and long-range (1.2 to 1.8 miles) capability. The LoRa module connects to an Arduino board that is programmed to process CAN data from the race vehicle’s CAN bus.
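Because LoRa payloads are small, each CAN frame has to be packed into a compact binary layout before transmission and unpacked again on the pit-lane laptop. The sketch below illustrates one possible layout in Python; the on-car firmware would run on the Arduino, and the 13-byte format, the function names, and the battery-voltage scaling are assumptions for this example, not the team's actual message definitions.

```python
import struct

# Little-endian: 32-bit CAN identifier, 1-byte data length, 8 data bytes (13 bytes total).
FRAME_FORMAT = "<IB8s"
FRAME_SIZE = struct.calcsize(FRAME_FORMAT)


def pack_can_frame(can_id, data):
    """Pack one CAN frame into a fixed-size payload suitable for a radio packet."""
    if len(data) > 8:
        raise ValueError("a classic CAN frame carries at most 8 data bytes")
    return struct.pack(FRAME_FORMAT, can_id, len(data), data.ljust(8, b"\x00"))


def unpack_can_frame(payload):
    """Recover (can_id, data) from a payload produced by pack_can_frame."""
    can_id, length, data = struct.unpack(FRAME_FORMAT, payload)
    return can_id, data[:length]


def decode_battery_voltage(data):
    """Hypothetical signal: unsigned 16-bit little-endian value, 0.01 V per bit."""
    (raw,) = struct.unpack_from("<H", data, 0)
    return raw * 0.01


# Round trip with a made-up identifier and a raw reading of 10250 (102.5 V).
payload = pack_can_frame(0x6B0, struct.pack("<H", 10250))
can_id, data = unpack_can_frame(payload)
voltage = decode_battery_voltage(data)
```

A fixed-size frame keeps parsing on the receiver trivial. If airtime becomes a constraint, several frames could be batched into one packet or only selected signals forwarded.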

The team designed the telemetry module to be a “Plug and Go” device installed onto the racecar without any redesign of existing systems. The LoRa transmitter is on the racecar while the receiver is in the pit. Because a LoRa transmitter can be relatively small, it has little to no effect on the existing vehicle structure. The receiving LoRa module will be equipped with a large antenna to maximize the connection to the vehicle during laps.

Lastly, with the data acquired on the laptop, an Excel sheet will process all the incoming data and visualize it in graphs that update in real time. The graphs will be stored on the laptop for later review by the team and the driver. We believe that with this telemetry system installed on the Michigan State University solar racecar, MSU SRT will be adding another strong capability to its arsenal.

MSU Department of Mechanical Engineering: E-Bike Dynamometer

Our team is composed of students from Michigan State University’s Electrical Engineering and Mechanical Engineering departments working together on an electric bike dynamometer. This project is a continuation of a project which began in the Fall of 2019. The goal of this project is to measure the power output from the rider input and the motor input and to evaluate the data against real-world examples. This includes testing conditions such as simulated inclines and different simulated speeds. This will make it possible to simulate a balance between the bike motor activation and rider input to create a seamless riding experience.

The tasks in this project continue the work that previous groups have already completed, building on their progress and making some revisions.

One of the first tasks was to create a stable platform that allows for stable mounting of a bike’s wheels to keep it from shifting while on the dynamometer. The ability to access the bike, via a platform, while it is mounted to the dyno was also needed. Along with this, the mounting of the load cell to the dynamometer to allow for power readings from the rear wheel of the bike needed to be assessed. The revision of the coding to run the motor and gather readings was also investigated.
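The step from a load cell reading to a power figure follows from basic mechanics: torque is force times moment arm, and power is torque times angular speed. The sketch below shows that calculation along with the extra force a simulated incline would add; the arm length, roller speed, and rider mass in the comments are hypothetical, and the function names are not taken from the project's code.

```python
import math

G = 9.80665  # standard gravity, m/s^2


def torque_nm(load_cell_force_n, moment_arm_m):
    """Torque reacted by the load cell: force times the arm it acts on."""
    return load_cell_force_n * moment_arm_m


def power_w(torque, rpm):
    """Mechanical power: torque times angular speed (rpm converted to rad/s)."""
    return torque * rpm * 2.0 * math.pi / 60.0


def grade_force_n(total_mass_kg, grade):
    """Force along the slope needed to hold speed on an incline.

    grade is rise over run, for example 0.05 for a 5 % hill.
    """
    return total_mass_kg * G * math.sin(math.atan(grade))


# Hypothetical reading: 100 N on a 0.25 m arm with the roller turning at 300 rpm.
example_power = power_w(torque_nm(100.0, 0.25), 300.0)
print(f"rear-wheel power: {example_power:.0f} W")
```

To simulate a hill, the dynamometer load could be set so that the braking force at the wheel matches `grade_force_n` for the chosen rider and bike mass, with rolling and aerodynamic terms added if a closer match to road conditions is needed.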

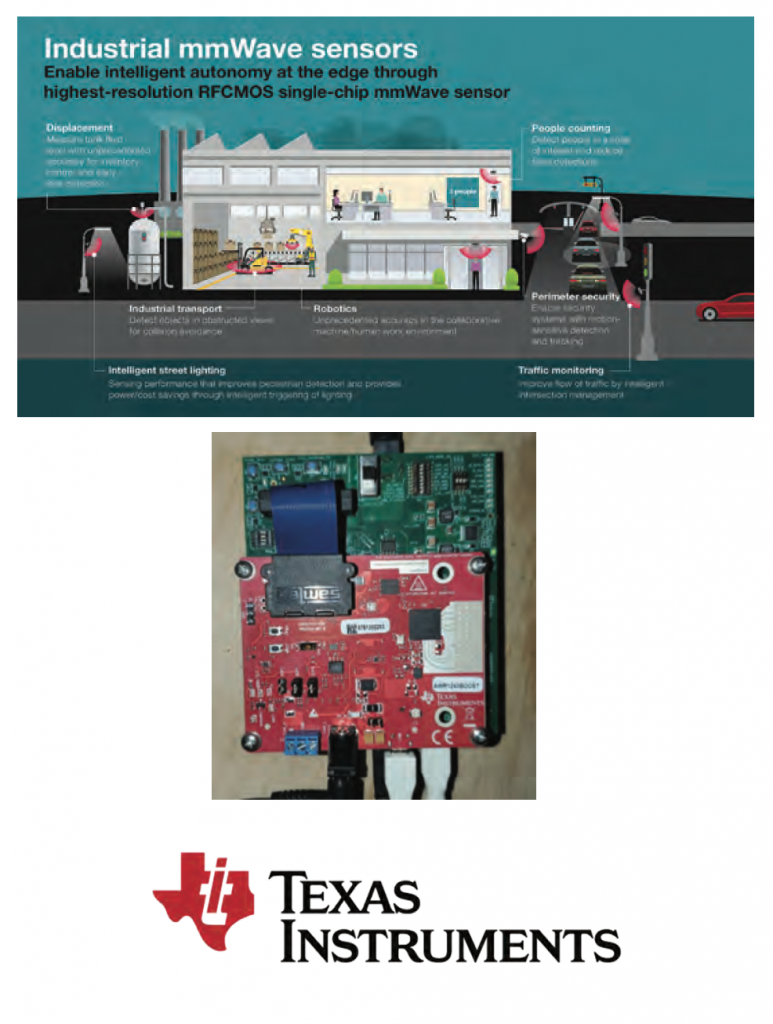

Texas Instruments: Classification with Millimeter-Wave Sensors

Millimeter waves, as classified by the International Telecommunication Union, are EM waves with frequencies ranging from 30 to 300 GHz. Today they are most commonly used for communication, mostly short-range, high-bandwidth data transfer, but mmWave has other applications as well. These include radar in weapon systems, autonomous vehicle sensors, and the scanners in place at security checkpoints across the US.

For this project, we will be using a board donated by Texas Instruments which operates between 60 and 64 GHz and is ideal for object identification and tracking in indoor environments. We will use this board to detect people and distinguish humans from other sources of movement in home and office environments.

A radar system transmits an electromagnetic wave signal that is reflected by objects in its path. MmWave sensing is a unique category of radar systems technology. The captured reflected signal is used to determine the range, velocity, and the angle of arrival of the objects. Using these data sets, our goal with this project is to create an algorithm we can use to distinguish and separate people from background information.
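How range, velocity, and angle of arrival come out of the reflected signal can be sketched with the standard relations for a frequency-modulated continuous-wave (FMCW) radar, the type used in TI mmWave sensors. The text does not give the chirp configuration, so the slope, chirp period, and antenna spacing in the comments are assumed values, and the function names are chosen for this example.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def range_resolution_m(bandwidth_hz):
    """Smallest separable range difference for a chirp sweeping bandwidth_hz."""
    return C / (2.0 * bandwidth_hz)


def range_from_beat_m(beat_freq_hz, chirp_slope_hz_per_s):
    """Target range from the beat frequency between transmitted and received chirps."""
    return C * beat_freq_hz / (2.0 * chirp_slope_hz_per_s)


def velocity_from_phase_mps(phase_diff_rad, wavelength_m, chirp_period_s):
    """Radial velocity from the phase change between two consecutive chirps."""
    return wavelength_m * phase_diff_rad / (4.0 * math.pi * chirp_period_s)


def angle_of_arrival_rad(phase_diff_rad, wavelength_m, antenna_spacing_m):
    """Arrival angle from the phase difference between two receive antennas."""
    return math.asin(wavelength_m * phase_diff_rad / (2.0 * math.pi * antenna_spacing_m))


# A sweep across the full 60 to 64 GHz band (4 GHz) resolves targets about 3.75 cm apart.
full_band_resolution = range_resolution_m(4e9)

# Hypothetical chirp slope of 50 MHz per microsecond and a 1 MHz beat frequency.
example_range = range_from_beat_m(1e6, 50e12)
```

Each detection therefore yields a range, a radial velocity, and an angle, and sequences of these measurements are the kind of features a classifier could use to separate people from other moving objects.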

We will leverage work already done by TI in their mmWave SDK and mmWave Studio, along with some example code to help us with the tracking layer of processing. Using sample datasets captured by our team, we will train a machine learning algorithm to identify humans, distinguish between multiple humans in a scene, and separate human targets from non-human moving objects in the scene. The goal is to have a working algorithm that can reliably detect and track two humans doing different tasks and identify a third non-human dynamic object as inanimate.